Interpretable Machine Learning

MICCAI 2018 Tutorial


Sunday, 16 September 2018


Time: 15:00 - 19:00

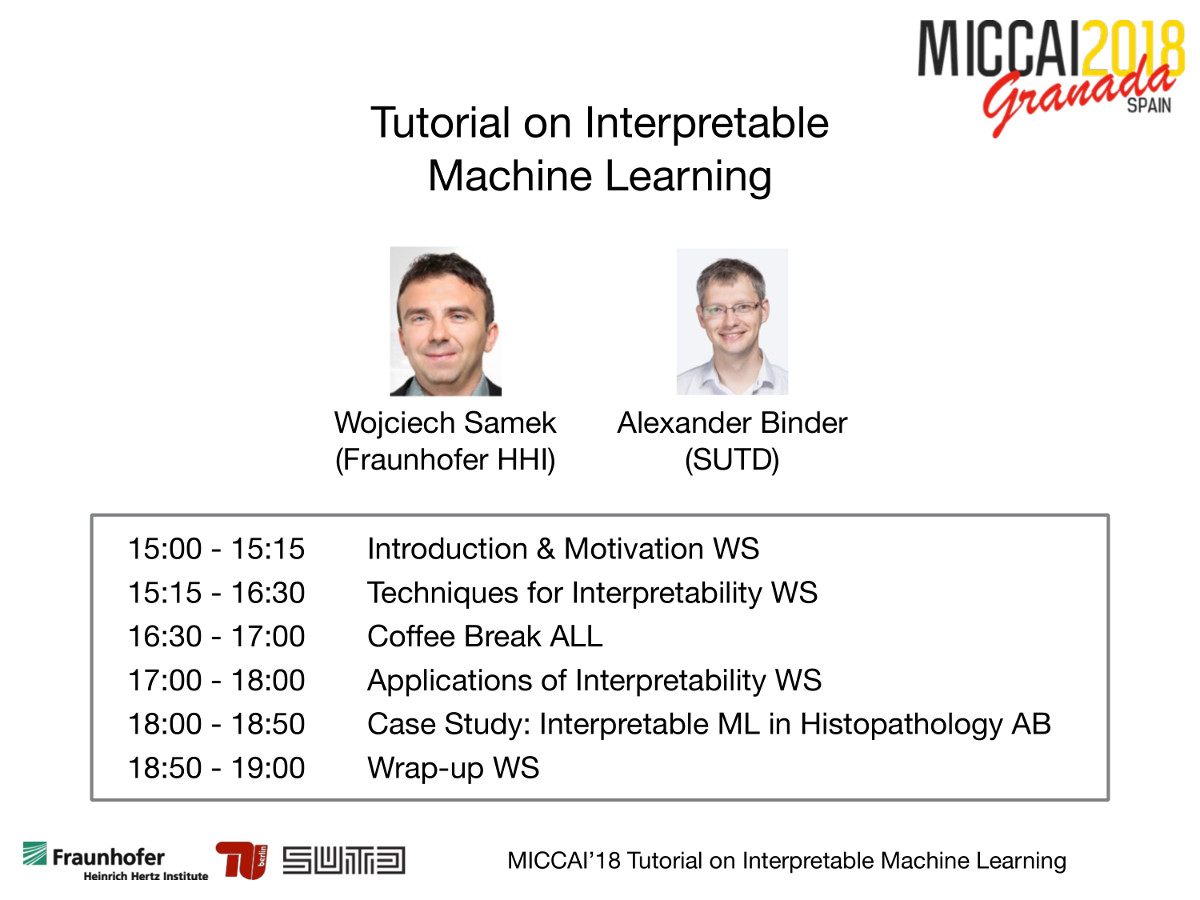

Schedule

| Time | Session |
| --- | --- |
| 15:00-15:15 | Introduction & Motivation |
| 15:15-16:30 | Techniques for Interpreting Deep Models |
| 16:30-17:00 | Coffee Break |
| 17:00-18:00 | Applications of Interpretability |
| 18:00-19:00 | Case Study: Interpretable Machine Learning in Histopathology |

Slides


Summary

Powerful machine learning algorithms such as deep neural networks (DNNs) can now harvest very large amounts of training data and convert them into highly accurate predictive models. DNN models have reached state-of-the-art accuracy in a wide range of practical applications. At the same time, DNNs are generally regarded as black boxes: because of their nonlinearity and deeply nested structure, it is difficult to understand their inference intuitively and quantitatively, e.g. what made the trained DNN model arrive at a particular decision for a given data point. This is a major drawback for applications where interpretability of the decision is an essential prerequisite.

For instance, in medical diagnosis incorrect predictions can be lethal, so black-box predictions cannot be trusted by default. Instead, the predictions should be made interpretable to a human expert for verification.

In the sciences, deep learning algorithms are able to extract complex relations between physical or biological observables. The design of interpretation methods to explain these newly inferred relations can be useful for building scientific hypotheses, or for detecting possible artifacts in the data/model.

From an engineer’s perspective, interpretability is also a crucial feature, because it makes it possible to identify the most relevant features and parameters and, more generally, to understand the strengths and weaknesses of a model. This feedback can be used to improve the structure of the model or to speed up training.

Recently, the transparency problem has been receiving more attention in the deep learning community. Several methods have been developed to understand what a DNN has learned. Some of this work is dedicated to visualizing particular neurons or neuron layers; other work focuses on methods that visualize the impact of particular regions of a given input image. An important question for the practitioner is how to objectively measure the quality of an explanation of the DNN prediction and how to use these explanations to improve the learned model.

Our tutorial will present recently proposed techniques for interpreting, explaining and visualizing deep models and explore their practical usefulness in computer vision.

For background material on the topic, see our reading list.

Outline of the tutorial

1. Deep Learning & Interpretability

- The need for interpretability in medical research

- Definitions of interpretability

2. Interpreting Deep Representations

- Visualizing filters

- Activation maximization

3. Explaining the Prediction of a Machine Learning Model

- Explaining linear models, support vector machines, deep neural networks, etc.

- Gradient-based techniques (e.g. sensitivity analysis)

- Decomposition-based techniques (e.g. layer-wise relevance propagation)

- Saliency vs. Decomposition

4. Applications

- How to evaluate the quality of explanations?

- What can we learn from interpretable models?

- Interpretability in practice (examples from computer vision, NLP, the sciences, ...)

5. Case study: Interpretable Machine Learning in Histopathology
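To make the "Saliency vs. Decomposition" item in the outline more concrete, here is a minimal sketch for a linear model with zero bias. It is an illustration under stated assumptions and is not taken from the tutorial material: the helper names (`linear_model`, `sensitivity`, `decomposition_linear`) and the example weights are hypothetical, the gradient is estimated with finite differences, and the decomposition shown (`w_i * x_i`) is only the simple linear case, not layer-wise relevance propagation for a deep network.

```python
from typing import Callable, List


def linear_model(weights: List[float], bias: float) -> Callable[[List[float]], float]:
    """Return f(x) = sum_i w_i * x_i + bias (hypothetical toy model)."""
    def f(x: List[float]) -> float:
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return f


def sensitivity(f: Callable[[List[float]], float], x: List[float],
                eps: float = 1e-6) -> List[float]:
    """Sensitivity scores: squared partial derivatives of f at x,
    estimated by central finite differences."""
    scores = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad_i = (f(up) - f(down)) / (2 * eps)
        scores.append(grad_i ** 2)
    return scores


def decomposition_linear(weights: List[float], x: List[float]) -> List[float]:
    """Decomposition for a zero-bias linear model: feature i receives
    relevance w_i * x_i, so the scores sum to the prediction."""
    return [w * xi for w, xi in zip(weights, x)]


# Assumed example values, chosen only for illustration.
weights = [2.0, -1.0, 0.5]
x = [1.0, 3.0, 0.0]

f = linear_model(weights, bias=0.0)
prediction = f(x)
sens = sensitivity(f, x)
relevance = decomposition_linear(weights, x)

print("prediction: ", prediction)
print("sensitivity:", sens)
print("relevance:  ", relevance)
```

In this toy setting the two views answer different questions. Sensitivity depends only on the weights, so it indicates which features the output would react to most strongly if they changed. The decomposition depends on the actual input and indicates how much each feature contributed to this particular prediction: the third feature has non-zero sensitivity but zero relevance because its input value is zero.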

Organizers

Wojciech Samek, Fraunhofer Heinrich Hertz Institute

Frederick Klauschen, Charité University Hospital Berlin

Klaus-Robert Müller, Technische Universität Berlin