Interpreting and Explaining Deep Models in Computer Vision

CVPR 2018 Tutorial


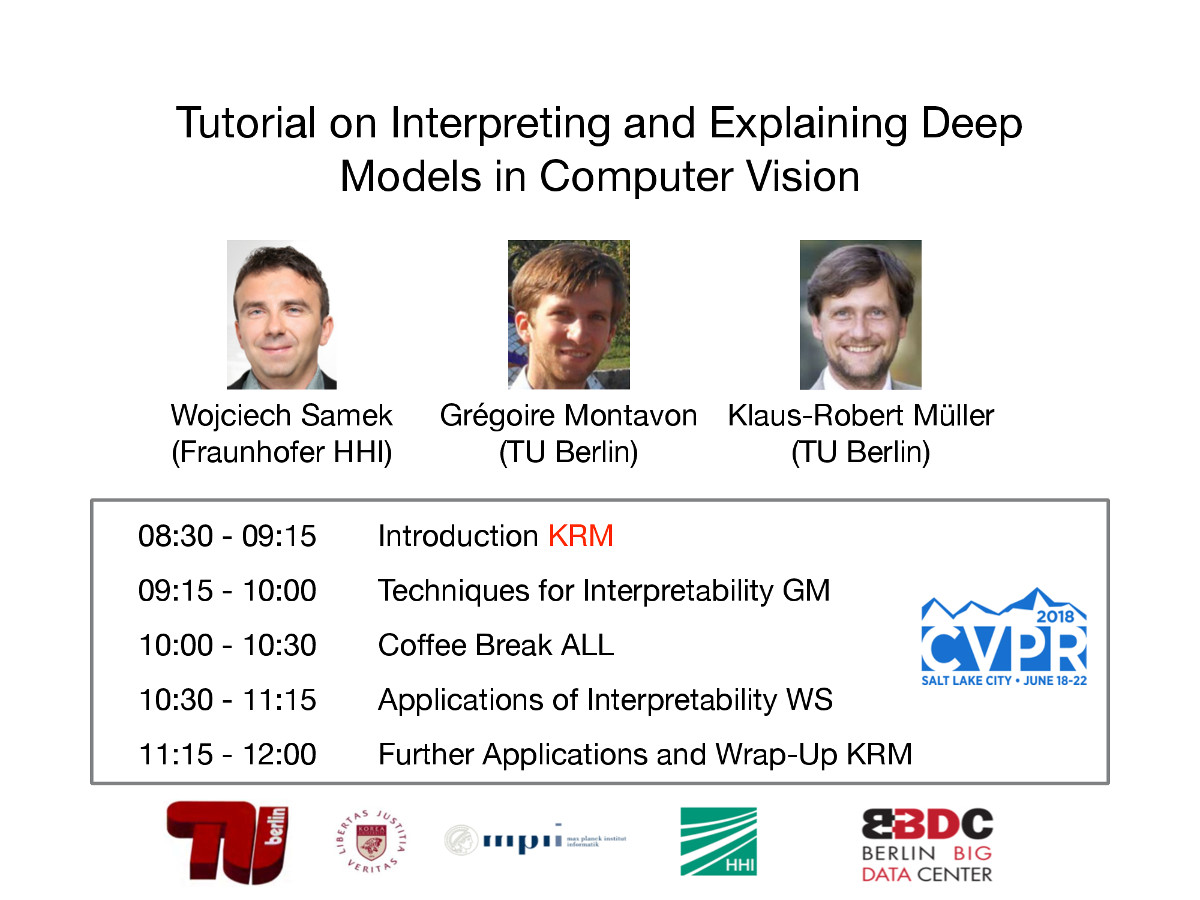

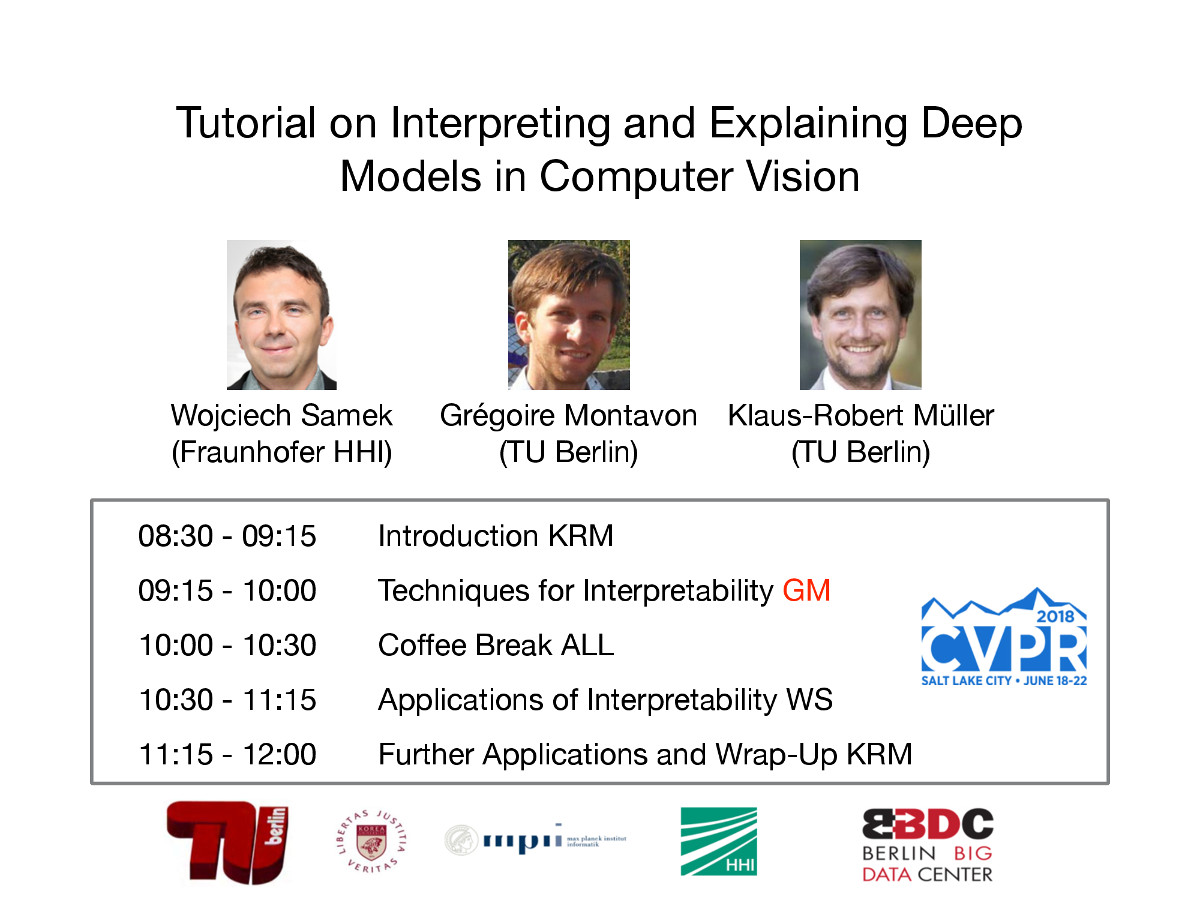

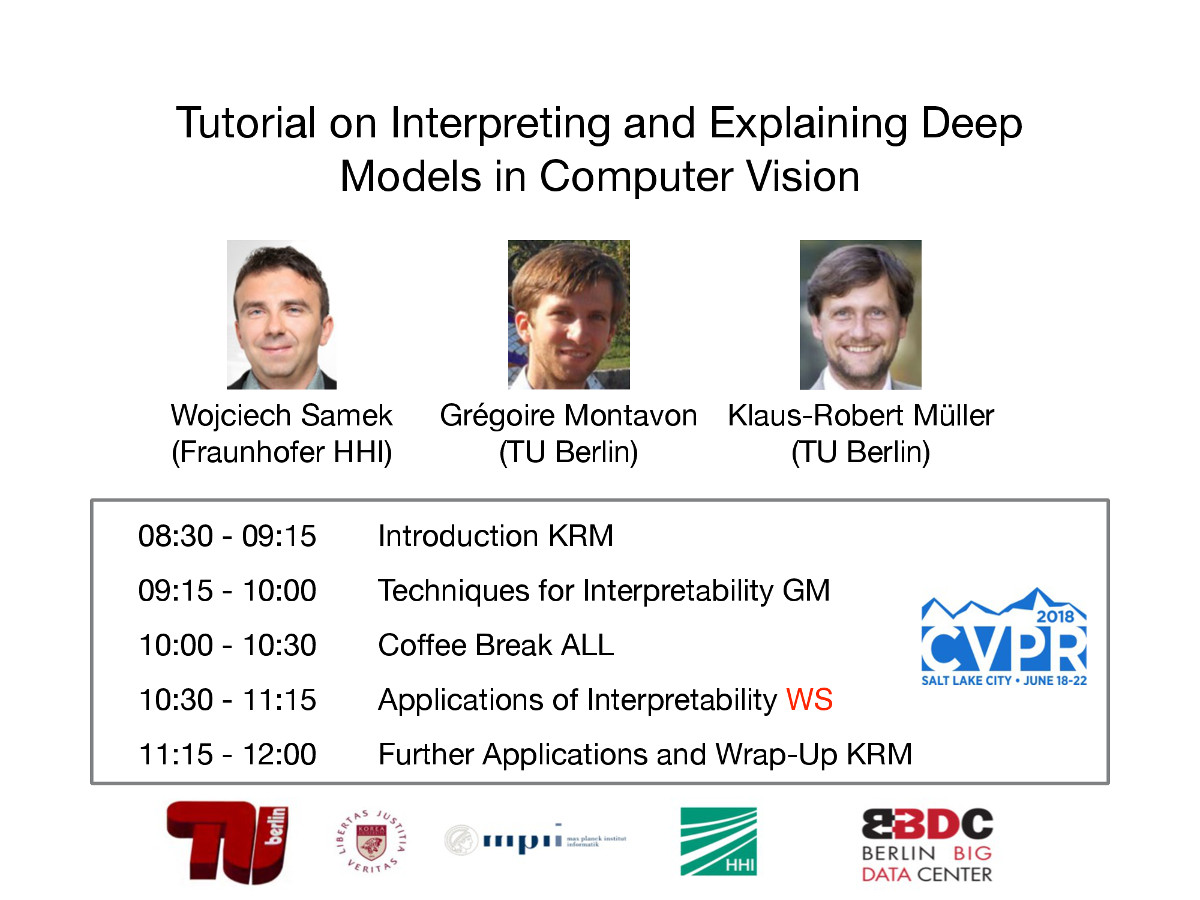

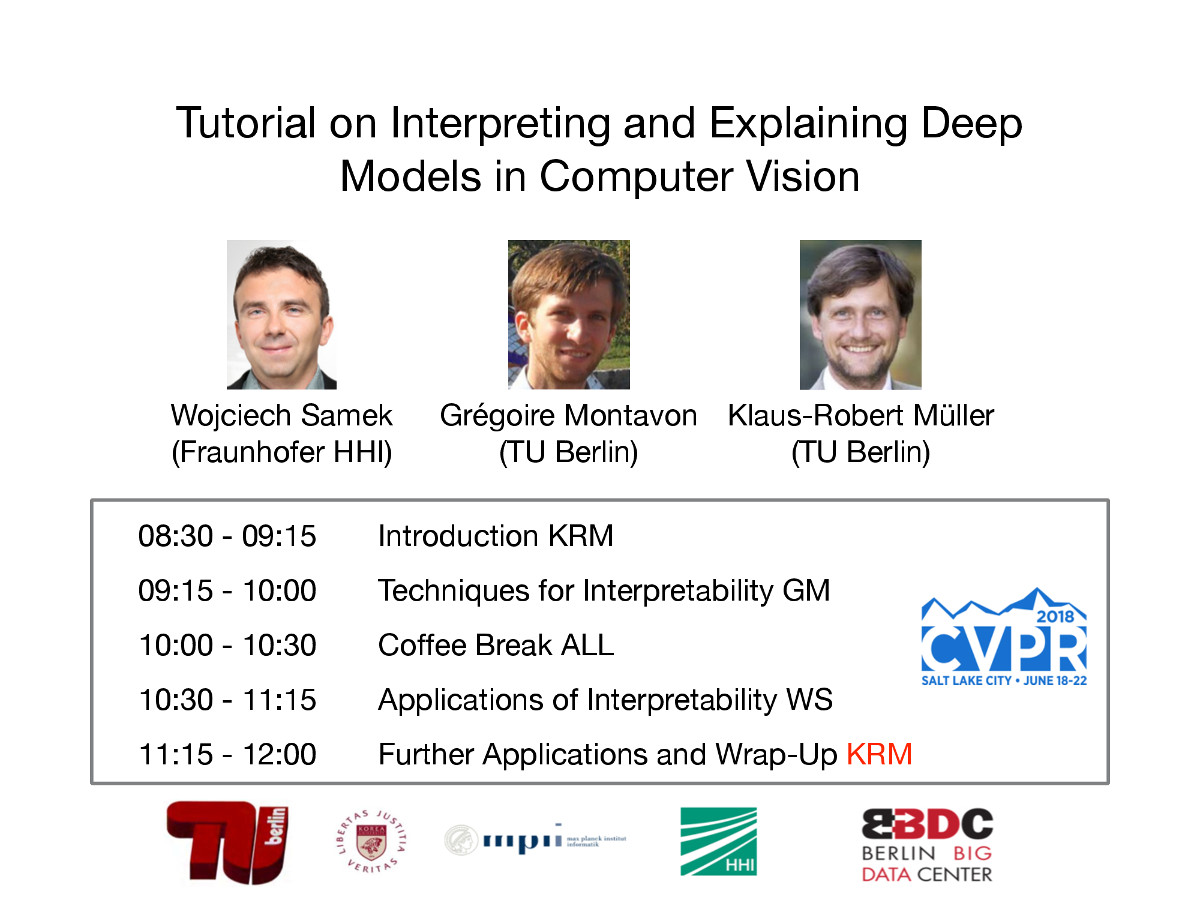

Monday, 18 June 2018


Time: 8:30 am - 12:00 pm. Location: Room 355 EF.


Schedule

| Time | Session |
|---|---|
| 08:30-09:15 | Introduction |
| 09:15-10:00 | Techniques for Interpretability |
| 10:00-10:30 | Coffee Break |
| 10:30-11:15 | Applications of Interpretability |
| 11:15-12:00 | Further Applications and Wrap-Up |

Summary

Machine learning techniques such as deep neural networks (DNNs) are able to convert large amounts of data into highly predictive models. Beyond their unmatched predictive capability, it is becoming increasingly important to understand, both qualitatively and quantitatively, how these models reach their decisions.

Our tutorial will provide a broad overview of techniques for interpreting deep models and of how some of these techniques can be made useful on practical problems. In the first part, we will lay out a taxonomy of these methods and explain how the various interpretation techniques can be characterized conceptually and mathematically. The second part of the tutorial will explain when and why we need interpretability.

For background material on the topic, see our reading list.

Outline of the tutorial

1. Definitions of interpretability

2. Techniques for understanding deep representations & explaining individual predictions of a DNN

3. Approaches to quantitatively evaluate interpretability

4. Using interpretability in practice (validating deep models, identifying biases & flaws in the dataset, understanding invariances of the model)

5. Extracting new insights in complex systems with interpretable models

Slides

| part 1 | part 2 | part 3 | part 4 |


Videos

Organizers

Wojciech Samek, Fraunhofer Heinrich Hertz Institute

Grégoire Montavon, Technical University Berlin

Klaus-Robert Müller, Technical University Berlin